Common Screen Resolutions for Mobile Testing in 2025

Erin Bailey

If you build or ship a mobile app, you already know the uncomfortable truth about mobile app testing: it is the last mile that breaks teams. Devices drift. OS updates surprise you. Automation flakes. Testing becomes the release bottleneck. And when the testing platform itself is not rock-solid, every other improvement you want to make gets throttled.

In 2025, we focused on fixing that problem at the foundation.

This post is our year-in-review for 2025. It is a story about execution, not hype. It is also a thank you. To customers who pushed us with real requirements. To employees who built with discipline. And to partners who helped us deliver a better end-to-end experience for mobile application testing across real devices.

Before anything else: thank you.

We take the responsibility seriously: your mobile releases depend on the reliability of our platform.

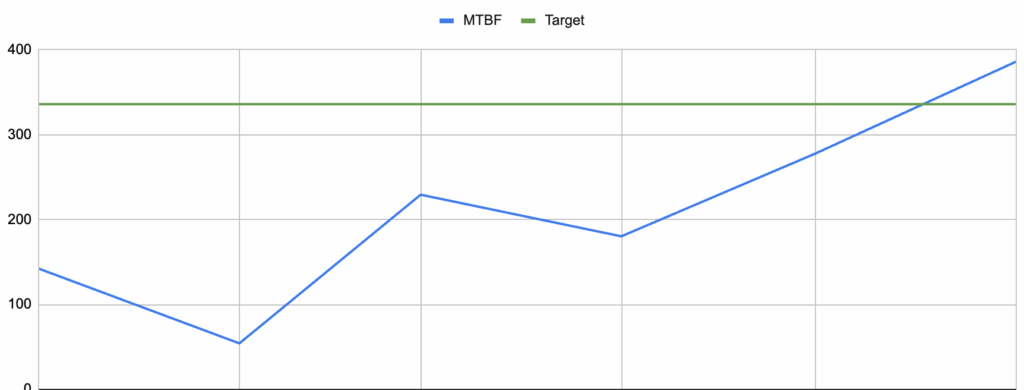

At the start of the year, we set two top-level goals that were easy to understand and hard to fake:

We reached cash-flow positive and growing in May. We reached the MTBF goal in June.

Those two outcomes are connected. A mobile testing company that cannot deliver reliability and repeatability is not in a position to earn long-term trust. And a company that cannot fund its own operations is not in a position to invest in meaningful innovation.

I briefed a leading VC in September on our financial performance. He said, “Frank, you are an anomaly in your profitability.” That was reassuring.

Reliability is not a feature, but it is the prerequisite for every feature that matters.

In 2025, we invested heavily in reducing “platform friction” in the places teams feel it most: device availability, session stability, upgrades, provisioning, and compatibility with the never-ending stream of new (and sometimes unstable) iOS and Android releases.

Unlike many competitors, such as BrowserStack, Sauce Labs, and LambdaTest, Kobiton gives customers the ability to set up their own device labs. We can support over 10,000 mobile devices on a single deployment. At that scale, every feature that makes it easier to manage devices spread across the globe is critical. A few examples of improvements in this area from 2025 releases:

If you run iOS devices at scale, provisioning and profile handling can turn into recurring operational debt. In 4.21, we shipped improvements to provisioning profile handling that reduce manual steps and help prevent devices going offline when profiles become invalid and need re-validation.

Another example of “remove the sharp edge”: in 4.22, deviceConnect automatically enables Developer Mode for iOS/iPadOS 16+ devices when connecting them to the Mac mini host. This reduces setup friction and eliminates a common cause of device onboarding failure.

When platform vendors change the rules, it affects every device lab and every test workflow. In 4.19.6, we addressed changes to iOS app signing related to Apple’s wildcard restrictions and provided guidance on transitioning to partial wildcards.

None of this is flashy. All of it matters.

This is the kind of reliability work that makes mobile testing tools feel “boring” in the best way. You click Start. It starts. You run again. It runs again.

That is the standard.

Reliability is table stakes. Responsiveness is what makes the platform feel trustworthy in the moment.

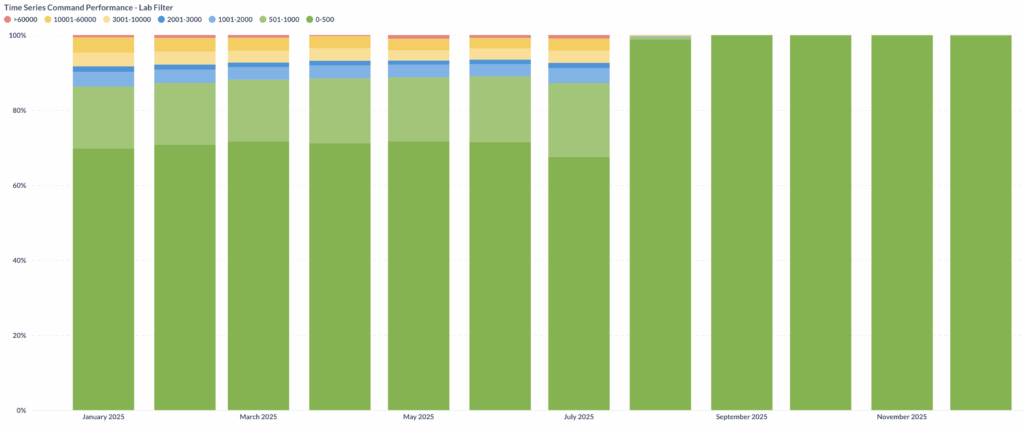

In 2025, we made significant performance improvements to manual testing. By the end of the year, we achieved 99.9% of manual test steps completing within 500 milliseconds.

That is not a vanity metric. It is a user experience metric.
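For teams that want to track a similar target on their own sessions, here is a minimal sketch of the arithmetic behind a "share of steps within a threshold" metric. The function name, the sample data, and the choice to treat the 500 ms boundary as inclusive are assumptions for illustration; this is not how Kobiton measures the figure internally.

```python
from typing import Sequence


def fraction_within(latencies_ms: Sequence[float], threshold_ms: float = 500.0) -> float:
    """Return the share of steps whose latency is at or below the threshold."""
    if not latencies_ms:
        raise ValueError("need at least one latency sample")
    within = sum(1 for value in latencies_ms if value <= threshold_ms)
    return within / len(latencies_ms)


# Hypothetical sample: 999 fast steps and 1 slow step (values in milliseconds).
sample = [120.0] * 999 + [900.0]
share = fraction_within(sample)

# The target is met when at least 99.9% of steps finish within the threshold.
meets_target = share >= 0.999
```

A share-within-threshold measure like this rewards consistency rather than a low average: a handful of very slow steps will pull it down even when typical latency looks fine.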

Manual testing is still a huge part of modern QA, even inside organizations with sophisticated automation. People debug failures manually. They reproduce issues manually. They validate “last mile” UX manually. When the platform introduces lag, testers slow down, lose confidence, and waste time.

We also shipped platform improvements aimed at making manual sessions more stable and responsive, including reduced command latency (by optimizing screenshot size and transmission) and improved touch responsiveness.

The outcome we cared about was simple: when a tester interacts with a real device remotely, the system should keep up with them. Consistently.

2025 was not only about stability and performance. It was also about investing in differentiated capabilities that matter to teams trying to scale quality.

Here are three themes that shaped a lot of our product work.

A common reality we see across enterprises: the team has lots of manual test coverage, but automation coverage is patchy or brittle. This is one of the biggest reasons teams plateau. They want to do mobile automation testing, but the path from intent to reliable execution is steep.

In 2025, we continued investing in reducing that gap:

This matters because Appium testing only helps teams when it is maintainable and repeatable. If your automation is constantly breaking on locator changes, or your scripts are hard to share across environments, you do not have automation. You have a treadmill.

That is why we also invested in…

A major barrier to scaling automation is that teams already have a lot invested in existing Appium frameworks. If a platform only works for “new scripts” or only for “generated scripts,” adoption slows down.

In 4.20, we expanded Appium Self-Healing to allow an automation session to be used as the baseline, enabling teams to get self-healing benefits without rewriting their existing scripts. The release notes include the mechanics: baseline session ID, enabling Appium Self-Healing, and how changes are surfaced back in Session Explorer.

This is the kind of capability that pays for itself in hours, not weeks.

When a locator changes and a run fails, you are not just losing a test run. You are burning engineer time. Over and over.

Reducing that waste is one of the highest ROI investments in mobile automation testing.

Even teams with good automation hit another ceiling: speed.

Your CI pipeline runs too long. Your feedback loop slows. Your team starts running fewer tests or splitting coverage across releases. That is how quality debt accumulates.

In 4.22, we introduced Appium Turbo Test Execution, designed to reduce client-server communication overhead so scripts run faster with no code changes. The release notes position it as improving overall test cycle time, especially when paired with Kobiton’s optimized Appium runtime.

This is where the future of automation goes: you should not need a specialized team just to keep automation fast and stable. The platform should handle more of that burden.

Mobile testing is not only taps and swipes. For many teams, the hardest parts to test are the parts that involve audio, accessibility requirements, and real-world conditions.

In 2025, we expanded support for audio injection in a way that is practical for enterprise environments:

The pattern here is important: we do not ship “checkbox features.” We ship capabilities that reflect real enterprise constraints, including controlled environments, hardware requirements, and clear compatibility boundaries.

Audio injection also ties directly to the broader trend we see in accessibility and compliance-driven mobile application testing. A testing platform should help teams validate experiences that real users depend on, including users who rely on assistive technologies and audio-driven workflows.

Many testing platforms focus almost exclusively on QA workflows. In 2025, we continued investing in developer access patterns too, because that is where quality becomes real.

Two themes stood out:

Developers want access to real devices without being forced into a different toolchain. virtualUSB remains a key part of that story, letting engineers integrate real devices into day-to-day debugging and investigation workflows.

In 4.21 and 4.21.1S, we released the Kobiton Command Line Interface (CLI), positioned for enterprise environments that need to operate devices in batches, support scripting, and manage multiple devices more efficiently. The release notes also define what a CLI session is and what it is not (for example: no Session Explorer, limited logs, and timeouts that can be managed via `kobiton session ping`).

This is a practical investment in developer velocity, lab operations, and repeatable workflows.

It is also a foundation we will build on in early 2026. We are not going to preview specifics here, but the direction is simple: tighter integration between remote devices and the tools developers already use, with less friction and more control.

Adoption is not the goal. It is a signal that the product is earning trust.

In 2025, adoption continued to expand across environments. Our multi-tenant cloud alone added 7,460 new users, and that figure does not include our on-premises or dedicated cloud deployments, which represent more than half of Kobiton’s business.

We track adoption because it is the leading indicator of something more important: teams making real mobile quality work repeatable inside their release cadence.

If you want the honest truth about adoption: you do not earn it with a feature checklist. You earn it by being dependable. Then fast. Then differentiated.

Rather than dumping a wall of release notes, here are a few 2025 highlights that reflect the bigger themes above:

That is the story: platform fundamentals and differentiated capability, shipped in a way that supports real workflows.

A year-in-review should include the lessons, not just the wins. Here are a few that shaped our approach:

Customers do not want a stream of features if the core platform is inconsistent. Reliability improvements create compounding returns because they reduce the cost of everything else: support load, operational overhead, and time wasted on debugging the platform.

Manual testing performance is not cosmetic. It is the difference between a tester trusting their tool and treating it as an obstacle. Hitting 99.9% of manual test steps within 500ms is not just about speed. It is about predictability.

Most teams do not fail at automation because they lack intelligence. They fail because the cost of maintenance is too high. Investments like self-healing for existing scripts and faster execution without rewrites are “practical innovation,” and that is where we will keep leaning.

We are entering 2026 with a clear focus: keep making mobile testing repeatable at scale.

That means continuing to raise the bar on:

If you build mobile apps, you already have enough uncertainty. Your mobile testing tools should not add to it.

To our customers: thank you for trusting us with your releases, and for pushing us to solve real problems, not hypothetical ones.

To our employees: thank you for the discipline and craftsmanship you brought to 2025. You built foundations that will support everything we do next.

To our partners: thank you for helping us extend the platform into real workflows and for investing with us in better outcomes for teams doing mobile app testing, mobile application testing, and Appium testing at scale.

We are proud of what shipped in 2025. More importantly, we are proud of what it enables: teams shipping better mobile experiences with confidence.

If you want to talk about any of the themes in this post, or how to apply them inside your organization’s mobile automation testing workflow, reach out via our support site or send an email to support@kobiton.com. We are here to help.